Jennifer DeStefano recounted receiving a horrifying telephone call from an unknown number.

To her disbelief, she heard what sounded like the panicked voice of her oldest daughter, Briana, begging to be saved from kidnappers.

“I hear her saying, ‘Mom, these bad men have me. Help me. Help me. Help me.’ And even just saying it just gives me chills,” DeStefano recounted in an interview with ABC News.

Fortunately, Briana was safe and sound.

It turned out that scammers had employed artificial intelligence (AI) to mimic Briana’s voice, aiming to extort money from her terrified family.

This incident is just one example of an alarming trend.

Check Point Technologies, one of the largest cybersecurity firms in the country, has reported a substantial increase in AI-based scams and attacks compared to the previous year.

According to the FBI Internet Crime Complaint Center, phone and cyber scams drained approximately $10 billion from Americans’ pockets in 2022.

DeStefano received the distressing call on January 20, while her 15-year-old daughter was on a ski trip. The scammer demanded a staggering $1 million.

The incident raised questions about the caller’s identity and how they could convincingly impersonate Briana, even fooling her mother.

Experts are sounding the alarm on such scams.

They said scammers only need a few seconds of social media content to recreate someone’s voice using artificial intelligence.

Rapid advancements in generative AI technology by companies like OpenAI and Midjourney have forced organizations to grapple with how to harness the potential of the technology while addressing concerns about the ease with which users can manipulate language, audio, and video.

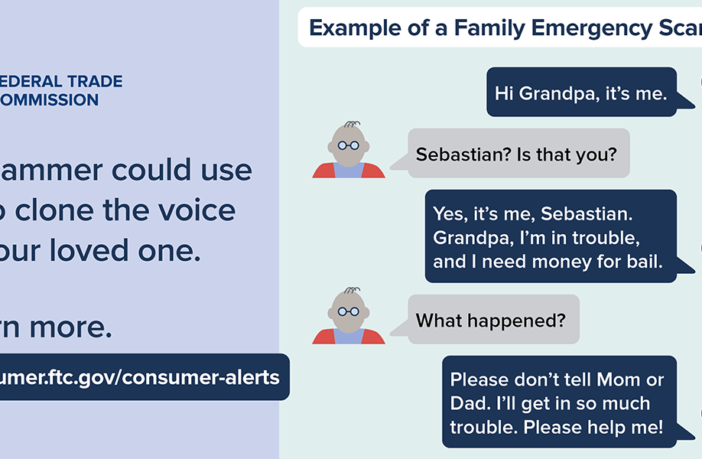

The Federal Trade Commission also has issued warnings about scammers employing artificial intelligence to clone people’s voices, leading to distressing situations for victims nationwide.

In May, McAfee Corp. published “The Artificial Imposter,” a report on how far-reaching AI scams have become.

In a news release, McAfee said it surveyed 7,054 people from seven countries and found that a quarter of adults had previously experienced some kind of AI voice scam, with 1 in 10 targeted personally and 15% saying it happened to someone they know. Seventy-seven percent of victims said they had lost money as a result.

Further, McAfee discovered that 53% of all adults share their voice online at least once a week, with 49% doing so up to 10 times in the same period.

The company said that practice is most common in India, with 86% of people making their voices available online at least once a week, followed by the U.K. at 56%, and then the U.S. at 52%.

“A quarter of adults surveyed globally have experience of an AI voice scam, with one in 10 targeted personally, and 15% saying somebody they know has been targeted,” McAfee researchers wrote.

“Overall, 36% of all adults questioned said they’d never heard of AI voice scams indicating a need for greater education and awareness with this new threat on the rise.”

Separately, AARP, in collaboration with the National Newspaper Publishers Association (NNPA), has been actively raising awareness about the pervasive issue of scams and fraud.

According to recent AARP/NNPA roundtable statistics, seven in 10 Black adults believe that scams and fraud have reached crisis levels.

Moreover, 85% of Black adults agree that victims should report these crimes to law enforcement.

Scams are particularly pronounced within the Black community, underscoring the urgent need for collective efforts to address fraud victimization.

There are steps individuals can take to avoid falling victim to AI voice scams.

“First and foremost, if you get a phone call that sounds like a loved one is in distress, do everything in your power to make contact with the person on the other end of the line,” Steve Grobman, chief technology officer at security firm McAfee, told ABC News.

“Ask a question that only the person on the other end of the line should know the answer to. Well, can you remind me what the last movie we saw or what did we have for dinner when we ate together last?”

Grobman added that individuals can also take steps to avoid being targeted.

“Limiting their public digital footprint is key,” he remarked.

“Cleaning up your name, address, personal details from data brokers makes it more difficult for scammers to create the narratives that they can use to build a scam.”